University of California San Diego, Center for Wireless Communications

Smart and Connected Vehicles and Roads: Real-time Data Fusion, mmWave V2X and Massive MIMO

Principal Investigators: Sujit Dey, Dinesh Bharadia, Truong Nguyen, Bhaskar Rao, Xinyu Zhang

Postdoctoral Scholar: Sabur Baidya

Introduction

Vehicles are steadily adding more sensors of various types, enabling real-time sensing of the environment for advanced driver assistance and autonomy. Making vehicles more intelligent is expected to greatly reduce the number of injuries and deaths that occur on roadways every year. Yet, despite these advances in sensing, a single vehicle will always be limited to perceiving its environment from a single perspective, and therefore never obtains a full observation of its surroundings due to occlusion, specular reflections, and limited sensing range.

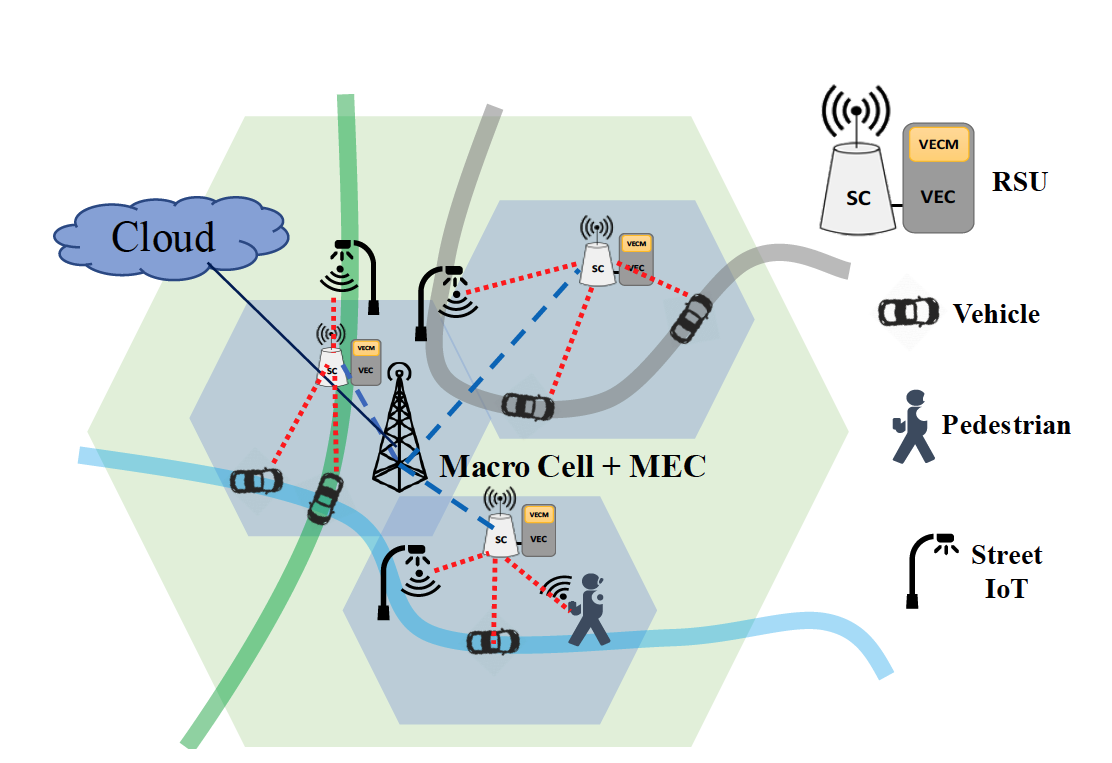

Fig. 1. Distributed edge computing and communications architecture, based on C-V2X and mmWave-V2X

A second, simultaneous development, the steady deployment of cameras and other IoT sensors on roads, creates a unique opportunity to network neighboring vehicles and road sensors in order to build a more comprehensive perception of the environment, enhancing road safety and efficiency for all road users. By leveraging the sensing of other vehicles and of road IoT devices, a vehicle can effectively see through other vehicles and around walls. While this could in principle be achieved by streaming all neighboring vehicle and road sensor data in real time to each vehicle, and processing it there, using evolving V2X technologies, doing so would impose prohibitively high communication and computing requirements on each vehicle. Instead, building on the increasing deployment of small cells along roads, we are developing a novel edge-cloud based collaborative data fusion and perception system. In this system, vehicle and road sensor data are streamed in real time to nearby road-side units (RSUs), each consisting of a small cell and an edge computing node, where they are fused and processed for collaborative perception instead of at individual vehicles. Figure 1 shows our proposed architecture: a distributed but overlapping network of RSUs, with each RSU responsible, via V2X connectivity, for the vehicles and road IoT devices in its own coverage area.
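To give a rough sense of why streaming raw sensor data to every vehicle is costly, the sketch below computes the uncompressed bit rate of camera streams. The resolution, bit depth, frame rate, camera count, and number of neighbors are illustrative assumptions, not testbed parameters.

```python
def raw_video_bitrate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed video bit rate in bits per second."""
    return width * height * bits_per_pixel * fps


# Illustrative assumptions, not testbed specifications:
# one 1080p RGB camera at 24 bits/pixel and 30 frames/s.
per_camera = raw_video_bitrate_bps(1920, 1080, 24, 30)

# Hypothetical scenario: 4 cameras per vehicle and 10 neighboring vehicles
# whose raw streams would all have to reach one receiving vehicle.
CAMERAS_PER_VEHICLE = 4
NEIGHBORS = 10
aggregate = per_camera * CAMERAS_PER_VEHICLE * NEIGHBORS

print(f"per camera: {per_camera / 1e9:.2f} Gbps")  # about 1.49 Gbps
print(f"aggregate:  {aggregate / 1e9:.1f} Gbps")   # about 59.7 Gbps
```

Compression lowers these numbers substantially, but the aggregate still grows linearly with the number of neighbors each vehicle listens to, which is the scaling that fusing at a shared RSU avoids.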

To enable this collaborative data fusion and perception system, we propose two distinct areas of research in this project cycle: i) real-time fusion of data from the predominant sensors on vehicles, such as cameras and radars, and ii) high-throughput, low-latency communications to stream data between vehicle sensors, road IoT sensors, and edge computing nodes (RSUs) for real-time data fusion. For the former, we first propose to fuse data from multiple camera systems to create 3D awareness of the environment, and then propose how radars can further extend this capability. The communications component is likewise split into two overlapping tracks, representing two promising directions for wireless communications and their application to vehicles: mmWave and massive MIMO. Specifically, to satisfy the ultra-high throughput and ultra-low latency requirements of real-time sensor data streaming and processing, we propose to research and develop the use of mmWave to support V2X, in addition to cellular-based V2X. The proposal concludes with a discussion of current progress in developing a testbed to analyze these solutions, and of future work to deploy it alongside our city partners. Note that the testbed is a collaboration with the partners of the Smart Transportation and Innovation Program (STIP), and is also partly supported by a recent grant from NSF ($2M CNS-1925767). Finally, while data security, privacy, and ethics are important considerations, they are beyond the scope of this project; we plan to set up affiliated projects investigating them in association with this project.

3D Feature Matching and Applications in Collaborative Vehicles

3D Feature Matching

Finding geometric correspondences is a key step in many computer vision applications. Depending on the type of input data, the task can be posed in 2D or in 3D. Compared to 2D data, which in most cases means image frames, 3D data has an additional dimension containing depth information. Depth data gives the exact location of each pixel in the real-world coordinate system and removes many 2D ambiguities, such as scale or skew differences caused by camera movement.

The common forms of 3D data are point clouds, surface meshes, and voxel grids. The latter two can be generated from a point cloud with additional processing. Although it is the direct output of most capture devices, such as RGB-D cameras or LiDAR, the point cloud is a challenging data source because of its large scale and unordered nature: a typical point cloud can contain millions of points, and shuffling their order should not change the cloud.
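A minimal illustration of the order-invariance point: binning points into a voxel occupancy grid gives the same result no matter how the points are listed. This plain occupancy grid is chosen only for simplicity; it is not the specific voxelization used in the cited works.

```python
import random


def voxelize(points, voxel_size):
    """Return the set of integer voxel indices occupied by (x, y, z) points.

    A set has no order, so the result cannot depend on how the points are listed.
    """
    return {
        (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        for x, y, z in points
    }


rng = random.Random(0)
cloud = [(rng.uniform(-5, 5), rng.uniform(-5, 5), rng.uniform(0, 2)) for _ in range(1000)]
shuffled = list(cloud)
rng.shuffle(shuffled)

# Same occupied voxels regardless of point order.
same = voxelize(cloud, 0.5) == voxelize(shuffled, 0.5)
print(same)  # True
```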

The current state-of-the-art algorithms [Gojcic et al., 2019][Choy et al., 2019] for finding correspondences between two point clouds involve two steps: voxelization and feature extraction. A carefully handcrafted technique converts the local point cloud around each key point into a voxel grid. This voxelization step not only addresses the unordered nature of the data but also allows the subsequent extraction network to exploit spatial information. The extraction network consists of multiple 3D convolution layers and outputs a compact vector (e.g., 32-dimensional) describing the input key point. Two 3D key points are matched by comparing their descriptors.
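Once descriptors are extracted, matching reduces to a nearest-neighbor search in descriptor space. The sketch below uses a mutual nearest-neighbor check, a common filtering heuristic assumed here for illustration; the cited methods may use different matching and outlier-rejection strategies. The 3-dimensional toy descriptors stand in for learned (e.g., 32-dimensional) ones.

```python
import math


def l2(a, b):
    """Euclidean distance between two descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(query, candidates):
    """Index of the candidate descriptor closest to `query`."""
    return min(range(len(candidates)), key=lambda j: l2(query, candidates[j]))


def mutual_matches(desc_a, desc_b):
    """Return (i, j) pairs where b_j is a_i's nearest neighbor and vice versa."""
    matches = []
    for i, d in enumerate(desc_a):
        j = nearest(d, desc_b)
        if nearest(desc_b[j], desc_a) == i:  # keep only mutually consistent pairs
            matches.append((i, j))
    return matches


desc_a = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
desc_b = [(0.0, 0.9, 0.1), (0.95, 0.05, 0.0), (0.1, 0.0, 0.9)]
print(mutual_matches(desc_a, desc_b))  # [(0, 1), (1, 0), (2, 2)]
```

Brute-force search is quadratic in the number of key points; at city scale a spatial index over descriptors would be the natural replacement.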

We propose to replace the current handcrafted voxelization step with a compact neural network. This replacement is designed to remove the processing-speed bottleneck and to improve the algorithm's robustness against noise and varying sampling density, both of which are common in 3D data.

Applications in Collaborative Vehicles

Estimating vehicle trajectory

3D feature matching can enable more accurate vehicle localization [Li et al., 2018][Behl et al., 2019]. Suppose we have a static point cloud of an entire city or area. Such a large-scale static point cloud can be generated by driving a LiDAR-equipped vehicle around the area or by multiple capture devices installed on street lamps. From this large point cloud, we crop a small portion around the location estimated from the vehicle's GPS/IMU data; the cropping greatly reduces computation. We then find correspondences between the point cloud from the vehicle's camera and the cropped city point cloud, and estimate the relative rigid transformation between them. This transformation refines the localization obtained from GPS/IMU data.
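The crop-then-align steps can be sketched as follows. To stay short, the alignment is the closed-form planar (yaw plus x-y translation) least-squares solution, assuming a roughly flat road; a full 6-DoF alignment would use the 3D Kabsch/SVD solution instead. Matched point pairs are assumed to come from the feature matching step, and the function names are hypothetical.

```python
import math


def crop(map_points, center_xy, radius_m):
    """Keep (x, y, z) map points within radius_m (horizontal) of the GPS/IMU estimate."""
    cx, cy = center_xy
    return [p for p in map_points if math.hypot(p[0] - cx, p[1] - cy) <= radius_m]


def estimate_rigid_2d(src, dst):
    """Least-squares yaw `theta` and translation `t` such that dst ≈ R(theta) src + t.

    src and dst are equal-length lists of matched (x, y) points.
    """
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy  # center both sets
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)  # rotation maximizing alignment of centered points
    c, s = math.cos(theta), math.sin(theta)
    t = (dx - (c * sx - s * sy), dy - (s * sx + c * sy))  # t = mean(dst) - R mean(src)
    return theta, t


# Synthetic check: apply a known transform, then recover it.
true_theta, true_t = 0.1, (2.0, -1.0)
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
c, s = math.cos(true_theta), math.sin(true_theta)
dst = [(c * x - s * y + true_t[0], s * x + c * y + true_t[1]) for x, y in src]
theta, t = estimate_rigid_2d(src, dst)
print(round(theta, 6), tuple(round(v, 6) for v in t))  # 0.1 (2.0, -1.0)

nearby = crop([(0.0, 0.0, 0.0), (1.0, 0.0, 0.5), (50.0, 50.0, 0.0)], (0.0, 0.0), 10.0)
print(len(nearby))  # 2
```

With real matches some pairs are wrong, so this least-squares step is typically wrapped in a robust loop (e.g., RANSAC) rather than applied to all matches at once.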

View sharing between vehicles

Sharing views among vehicles is the main advantage of a collaborative vehicle system. Occlusions and detection failures can cause serious traffic accidents, and view sharing [Straub et al., 2017] can greatly reduce such problems. Whether the sharing happens between two vehicles or between a vehicle and a centralized node, information expressed in the source vehicle's coordinate system must be transformed into the coordinate system of the target vehicle or centralized node. Fast and accurate 3D feature matching, together with GPS/IMU data, will provide an accurate transformation for this merging.
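A minimal sketch of the merging step, assuming each vehicle's pose in a shared world frame is already known (e.g., GPS/IMU refined by 3D feature matching). The `(x, y, z, yaw)` pose format and the function names are hypothetical, and only yaw is modeled, as on a flat road.

```python
import math


def to_world(pose, p):
    """Vehicle-frame point -> world frame. pose = (x, y, z, yaw) of the vehicle."""
    x0, y0, z0, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    px, py, pz = p
    return (c * px - s * py + x0, s * px + c * py + y0, pz + z0)


def from_world(pose, p):
    """World-frame point -> vehicle frame (inverse of to_world: R^T (p - t))."""
    x0, y0, z0, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    dx, dy, dz = p[0] - x0, p[1] - y0, p[2] - z0
    return (c * dx + s * dy, -s * dx + c * dy, dz)


def merge_into_target(target_pts, source_pts, source_pose, target_pose):
    """Express the source vehicle's points in the target vehicle's frame and append them."""
    moved = [from_world(target_pose, to_world(source_pose, p)) for p in source_pts]
    return target_pts + moved


target_pose = (0.0, 0.0, 0.0, 0.0)        # target vehicle at the origin, facing +x
source_pose = (10.0, 0.0, 0.0, math.pi)   # oncoming vehicle 10 m ahead, facing -x
pedestrian_in_source = [(5.0, 0.0, 0.0)]  # seen 5 m in front of the source vehicle
merged = merge_into_target([(2.0, 1.0, 0.0)], pedestrian_in_source, source_pose, target_pose)
print(merged[-1])  # approximately (5.0, 0.0, 0.0) in the target vehicle's frame
```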

3D understanding

While collaborative vehicles can share their locations and plans with each other, it is impractical to ask pedestrians and cyclists to provide such information. We therefore need to detect them and, ideally, predict their next moves; if we can predict their future locations, we can send alerts to both vehicles and pedestrians/cyclists. Such a task requires semantic segmentation, classification, and temporal reasoning [Halber et al., 2019]. We plan to investigate how well the future positions of pedestrians and cyclists can be predicted from current and previous point clouds.
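As a point of reference for the prediction task, the sketch below extrapolates a pedestrian's centroid under a constant-velocity assumption, a simple baseline that learned predictors are commonly compared against; it is not the method this project will develop. It assumes the pedestrian's points are already segmented and associated across frames.

```python
def centroid(points):
    """Mean (x, y, z) of a list of points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def predict_constant_velocity(track, dt, horizon):
    """Extrapolate a pedestrian's centroid `horizon` seconds ahead.

    track: per-frame point clouds of one pedestrian, captured dt seconds apart.
    Only the last two frames are used to estimate velocity.
    """
    prev, curr = centroid(track[-2]), centroid(track[-1])
    vel = tuple((c - p) / dt for c, p in zip(curr, prev))
    return tuple(c + v * horizon for c, v in zip(curr, vel))


track = [
    [(0.0, 0.0, 0.9), (0.0, 0.0, 1.1)],    # frame t - dt
    [(0.15, 0.0, 0.9), (0.15, 0.0, 1.1)],  # frame t: moved 0.15 m in x
]
future = predict_constant_velocity(track, dt=0.1, horizon=1.0)
print(future)  # approximately (1.65, 0.0, 1.0)
```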

Timeline

1-8 months: Fast and accurate 3D feature matching

9-13 months: Estimating vehicle/camera trajectory

14-18 months: View sharing between vehicles

19-24 months: 3D understanding

All-Weather 3D Perception for Intelligent Vehicles Using Cooperative Radars

Motivation

Why use wireless signals (radars) for perception in extreme weather?

Camera and LiDAR are light-based techniques and therefore cannot perceive the environment reliably in extreme weather. Because the wavelength of light is extremely small (nanometers), light-based sensing modalities are easily affected by suspended dust or snow. The figure below shows an example of limited sensing in snow. As a result, perception with these sensors is limited to good weather. In contrast, wireless signals can penetrate extreme weather conditions with much less degradation, and therefore provide the ability to perceive the environment in all weather. The goal of this project is thus to enable radar-based 3D geometry perception in extreme conditions, together with a camera fusion system that can eliminate the need for LiDAR, cutting costs and increasing robustness at the same time.
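For scale, the wavelength follows from λ = c/f. The sketch compares a mmWave radar wavelength against a near-infrared LiDAR wavelength; 77 GHz and 905 nm are typical example values we assume here, not the specific sensors used on the testbed.

```python
C = 299_792_458.0  # speed of light in m/s


def wavelength_m(freq_hz):
    """Free-space wavelength lambda = c / f, in meters."""
    return C / freq_hz


# Assumed example values, not the testbed's sensors:
radar_lambda = wavelength_m(77e9)  # 77 GHz, a common automotive radar band
lidar_lambda = 905e-9              # 905 nm, a common near-infrared LiDAR wavelength
ratio = radar_lambda / lidar_lambda

print(f"radar wavelength: {radar_lambda * 1e3:.2f} mm")  # about 3.89 mm
print(f"radar / LiDAR wavelength ratio: {ratio:,.0f}")
```

The radar wavelength is several thousand times longer, which is the physical basis for its weaker interaction with small airborne particles.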

Why 3D object detection?

3D object detection is important in autonomous applications that require decision making or interaction with objects in the real world. It recovers both the 6-DoF pose (relative position and orientation in real-world coordinates) and the dimensions of an object from an image. This enables a self-driving car to detect objects and perceive their geometry, including their orientation and distance from the car in real-world coordinates.
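A 3D detection output is commonly parameterized as a box center, dimensions, and heading. The sketch converts that parameterization into the eight box corners, which is how boxes are usually drawn or compared. Yaw-only orientation is an assumption that is common for road objects; a full 6-DoF pose would add roll and pitch.

```python
import math


def box_corners(center, dims, yaw):
    """Eight corners of a 3D bounding box.

    center = (x, y, z) of the box center, dims = (length, width, height),
    yaw = heading about the vertical z axis in radians (roll and pitch assumed zero).
    """
    cx, cy, cz = center
    l, w, h = dims
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-l / 2, l / 2):
        for dy in (-w / 2, w / 2):
            for dz in (-h / 2, h / 2):
                # Rotate the local offset by yaw, then translate to the box center.
                corners.append((cx + c * dx - s * dy, cy + s * dx + c * dy, cz + dz))
    return corners


# Hypothetical detection: a car 10 m ahead and 2 m to the side, aligned with the road.
corners = box_corners((10.0, 2.0, 0.75), (4.5, 1.8, 1.5), 0.0)
print(min(c[0] for c in corners), max(c[0] for c in corners))  # 7.75 12.25
```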

Approach

Wireless radars suffer from sparse, noisy point clouds and specular reflections. To mitigate the sparsity of point clouds created with a single radar, Prof. Bharadia's team has proposed cooperative radars: multiple radars placed in a distributed fashion form a large virtual aperture and capture more of the specular and diffracted reflections. This allows us to create dense point clouds, which can then be used for perception.
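Why a larger aperture helps can be seen from the rough approximation Δθ ≈ λ/D for the angular resolution of an aperture of size D. The sketch compares a compact 8-element, half-wavelength array against a 1 m distributed aperture; both configurations are assumptions for illustration, and the approximation ignores the grating lobes and synchronization and calibration errors that a real sparse distributed aperture must handle.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def angular_resolution_deg(freq_hz, aperture_m):
    """Approximate angular resolution lambda / D, in degrees."""
    lam = C / freq_hz
    return math.degrees(lam / aperture_m)


f = 77e9  # assumed radar carrier frequency
lam = C / f
single = angular_resolution_deg(f, 8 * lam / 2)  # assumed 8 elements at half-wavelength spacing
distributed = angular_resolution_deg(f, 1.0)     # assumed 1 m spread between cooperating radars

print(f"single radar: {single:.1f} deg, distributed: {distributed:.2f} deg")
```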

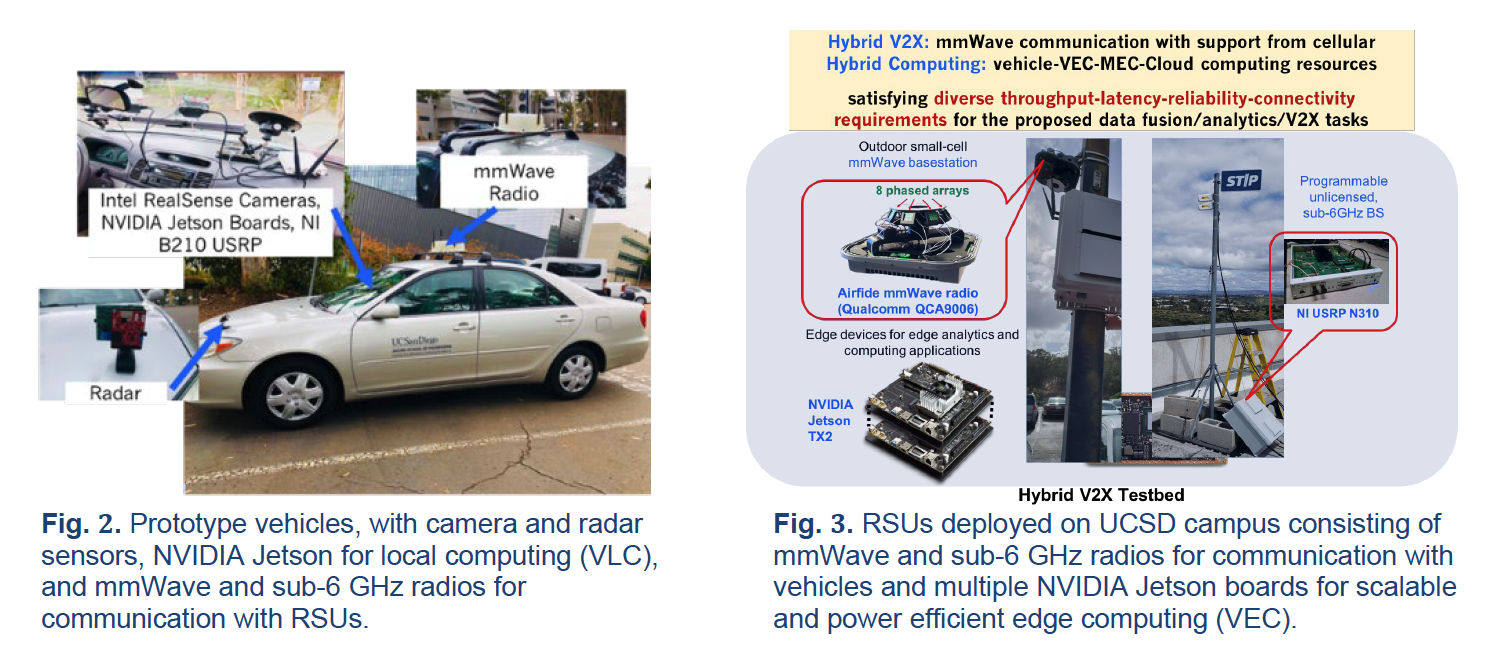

STIP Testbed

The radars deployed on the STIP vehicles (Fig. 2) are from Prof. Bharadia's team. Furthermore, we have exclusive access to TI 1-degree radars that can be synchronized, which are unique to our group in the world. We plan to collect radar and LiDAR data synchronized with camera data.

Research Tasks

Year 1: We will leverage the dense point clouds to build deep learning algorithms that enable 3D geometry perception using radars. Cameras, on the other hand, capture texture, color, and other appearance-based features well, which are hard to recognize with wireless signals. Fusing camera and radar therefore combines complementary strengths and can perform well in perceiving the surroundings.
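A basic step in camera-radar fusion is placing radar returns in the image, so that appearance features and radar range can be associated. The sketch uses a standard pinhole projection; the extrinsic rotation and translation, intrinsics, and axis conventions (radar: x forward, y left, z up; camera: x right, y down, z forward) are assumed calibration values, not those of our vehicles.

```python
def project_radar_to_image(points_radar, R, t, fx, fy, cx, cy):
    """Project radar points to pixel coordinates with a pinhole camera model.

    R (3x3 nested list) and t (3-vector) map radar coordinates into the camera
    frame. Points behind the camera are dropped. Returns (u, v, depth) tuples.
    """
    pixels = []
    for p in points_radar:
        cam = [sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)]
        x, y, z = cam
        if z <= 0:  # behind the image plane
            continue
        pixels.append((fx * x / z + cx, fy * y / z + cy, z))
    return pixels


# Assumed calibration: camera and radar co-located, only the axis convention differs.
R = [[0.0, -1.0, 0.0],   # camera x = -radar y
     [0.0, 0.0, -1.0],   # camera y = -radar z
     [1.0, 0.0, 0.0]]    # camera z =  radar x
t = [0.0, 0.0, 0.0]
points = [(20.0, 0.0, 0.0), (10.0, 1.0, 0.0), (-5.0, 0.0, 0.0)]
print(project_radar_to_image(points, R, t, 1000.0, 1000.0, 640.0, 360.0))
# [(640.0, 360.0, 20.0), (540.0, 360.0, 10.0)]
```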

Year 2: To achieve cooperative 3D geometry perception, i.e., 3D bounding box, depth, and pose detection of objects in bad weather, we will train cooperative radars using cooperative cameras as supervision. Cameras are cheap and ubiquitous but often have difficulty reconstructing 3D geometry; the goal is therefore to fuse camera data with radar data to enable holistic perception. We will build a low-complexity deep learning algorithm that can be deployed on an Edge TPU, and study how to partition processing between cloud and edge.

Site-Specific mmWave Channel Profiling, Prediction and Network Adaptation

Use of mmWave frequencies is a key driver for improving cellular network capacity in 5G and beyond. However, most current use cases are limited to static point-to-point settings, such as wireless HDMI and backhaul. In contrast, anticipated 5G applications such as immersive media and V2X will require mmWave to deliver LTE-like coverage, stability, and mobility support. The main challenge in meeting this expectation is that mmWave link performance is highly sensitive to the environment, due to strong attenuation, blockage, and reflection. This project aims to explore a data-driven approach to these challenges. At a high level, we leverage channel sensing data to profile and predict the spatial channel distribution in a site-specific manner. We then adapt link and network parameters accordingly to optimize coverage and reduce beam management overhead. We adopt the V2X scenario as an example use case, but the approach can be generalized to other scenarios, such as indoor mmWave pico-cell networking.
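Part of the attenuation gap can be quantified with the Friis free-space path loss between isotropic antennas, which grows by 20 dB per decade of frequency. The 100 m distance and 2.4 GHz comparison point are illustrative choices; the formula covers free space only and excludes blockage, reflections, and oxygen absorption, which is significant near 60 GHz.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def fspl_db(distance_m, freq_hz):
    """Friis free-space path loss between isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)


loss_sub6 = fspl_db(100, 2.4e9)
loss_60g = fspl_db(100, 60e9)
print(f"{loss_sub6:.1f} dB at 2.4 GHz, {loss_60g:.1f} dB at 60 GHz")
print(f"difference: {loss_60g - loss_sub6:.1f} dB")  # 20*log10(25), about 28 dB
```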

Ongoing work by CWC faculty and students is already demonstrating the strong potential for mmWave V2X through trace driven simulation, experimental mmWave radio platforms, and a campus-scale reconfigurable mmWave network supported by the NSF. The proposed project will leverage these experimental environments for the design and validation of learning algorithms that can aid in network planning and enable mmWave radios to continuously learn from their environment even after deployment.

Research Questions

1. Can we leverage the advances in 3D mapping and RF channel prediction to realize highly automated and effective network planning?

2. Can we tailor the beamforming codebook design and selection algorithms to the radio environment, in order to adapt to the high mobility and unpredictable blockage?

Intelligent Network Planning through Channel Cartography

Significant optimizations can occur even before a mmWave network is deployed by performing accurate simulations of the expected environment. Typically, ray tracing simulations are used when determining coverage; however, newer methodologies are being proposed that utilize sparse measurements of the RF environment to produce even more precise results. Utilization of these simulations can allow evaluation of the network impact of various configurations that would otherwise be economically infeasible to measure, such as BS placement, antenna location on vehicles, codebook design, and phased array design.

Ray tracing simulations [Remcom, 2020] have proven to be quite accurate for mmWave frequencies. They function by utilizing a 3D model of the environment that is annotated with material types of known wireless penetration/reflection properties. These 3D models are available for most urban areas as an HD map. Combining this functionality with mobility data from a traffic simulator allows for modeling mmWave V2X scenarios [Klautau et al., 2018].
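Ray tracers combine many geometric primitives with material models; the core geometric step for a single specular bounce can be sketched with the image method. The 2D geometry, the single infinite wall, and the absence of any material loss are simplifying assumptions; this is not how the cited commercial tool is implemented internally.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def reflected_path(tx, rx, wall_y):
    """Single specular reflection off the infinite wall y = wall_y (image method).

    Assumes tx and rx are on the same side of the wall.
    Returns (path_length_m, reflection_point).
    """
    image = (tx[0], 2 * wall_y - tx[1])  # mirror the transmitter across the wall
    length = math.dist(image, rx)        # unfolded path is a straight line to the image
    # The reflection point is where the image-to-rx segment crosses the wall.
    frac = (wall_y - image[1]) / (rx[1] - image[1])
    point = (image[0] + frac * (rx[0] - image[0]), wall_y)
    return length, point


tx, rx, wall_y = (0.0, 0.0), (20.0, 0.0), 5.0
los = math.dist(tx, rx)
length, point = reflected_path(tx, rx, wall_y)
extra_delay_ns = (length - los) / C * 1e9
print(f"reflected path {length:.2f} m via {point}, {extra_delay_ns:.1f} ns after LOS")
```

The material properties discussed next would determine how much power this reflected path carries, through a reflection coefficient that the sketch omits.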

While ray tracing can be quite accurate, it is not perfect. Material types can easily vary among locations, such as the density of walls or the type of paint/coatings used. Other fine-grained features of the environment, such as foliage or the chamfers/bevels of buildings, are not accurately captured by 3D models. Prior work has shown that the reflectors in a mmWave environment, and their properties, can be sensed directly from RF measurements [Wei et al., 2017]. Incorporating these measurements into trace-driven simulations can therefore increase the accuracy of the simulation results and of the conclusions drawn from them. The proposed project will examine how to incorporate sensing from other modalities, such as 3D vision, and how to leverage knowledge gained at one geographic location to model a different environment, in order to create more accurate RF environment models without high cost to operators during the planning stage.

Lifelong Learning at the Base Station

While accurate network planning can improve the efficiency of mmWave networks, environments inevitably change. In the long term, environments change due to seasons impacting the foliage in the environment or new construction. In the short term, networks are impacted by fluctuations in user movement/density and the introduction of dynamic, short lived, blockages. If a BS can continually learn from, and predict, its environment, it will be able to adapt to these changes and maintain or improve network capacity over time.

Codebook Adaptation Based on User Location and Demands

Due to differences in RF propagation at higher frequencies, mmWave radios typically employ phased arrays, whose directional gain more than compensates for the increased path loss. Since a radio needs to steer its beams quickly to communicate with different users, it has a discrete set of beams, termed a codebook, that determines the achievable directional gains, and it must choose among that set during operation. The choice of codebook therefore has a large impact on physical-layer metrics such as SNR, coverage, and beam coherence time. Further, there is unlikely to be a one-size-fits-all codebook, since the terrain and user density differ from one BS deployment to another and can even vary throughout the day with patterns of human movement (e.g., users flow into the city in the morning and out in the evening). It is therefore prudent to develop algorithms that adapt the codebook over the long-term operation of a BS to improve operational metrics.
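To make "codebook" concrete, the sketch builds a DFT-style codebook for an N-element uniform linear array with half-wavelength spacing, a standard textbook construction assumed here for illustration, and picks the beam with the highest gain toward a user. Real phased arrays, such as the 6×6 planar arrays described later, add 2D geometry and quantized phase shifters.

```python
import cmath
import math


def steering_vector(n, angle_rad):
    """Array response of an n-element, half-wavelength-spaced uniform linear array."""
    return [cmath.exp(1j * math.pi * k * math.sin(angle_rad)) for k in range(n)]


def dft_codebook(n, num_beams):
    """Unit-norm beams steered to angles with sin(angle) uniformly spaced over [-1, 1)."""
    beams = []
    for b in range(num_beams):
        s = -1 + 2 * b / num_beams
        w = steering_vector(n, math.asin(s))
        beams.append([x / math.sqrt(n) for x in w])
    return beams


def beam_gain(weights, angle_rad):
    """Power gain |w^H a(angle)|^2 of a beam toward angle_rad."""
    a = steering_vector(len(weights), angle_rad)
    return abs(sum(w.conjugate() * x for w, x in zip(weights, a))) ** 2


def best_beam(codebook, angle_rad):
    """Index of the codebook entry with the highest gain toward angle_rad."""
    return max(range(len(codebook)), key=lambda i: beam_gain(codebook[i], angle_rad))


codebook = dft_codebook(16, 16)
user_angle = math.asin(0.25)  # a user exactly on beam 10's grid point
i = best_beam(codebook, user_angle)
print(i, round(beam_gain(codebook[i], user_angle), 3))  # 10 16.0
```

Adapting the codebook, in this picture, means changing which angles (or weight vectors) populate the list, for example concentrating beams where users are observed most often.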

High Efficiency Beam Management

To communicate, the BS and UEs must determine which beams from their codebooks to use in a given situation, a process known as beam training. However, as codebooks grow larger, as the number of users increases, and as users move more quickly so that beam alignment is short-lived, the overhead of beam training becomes quite high. To address this problem, a combination of compressed sensing and machine learning has been proposed [Myers et al., 2019] that can predict the current state of all beams with much lower overhead. Future work will examine how the time evolution of the channel can be leveraged to further reduce beam training overhead and to exploit short-lived reflections. Even though vehicles move quickly, their movement is much more predictable than that of a handset, which could allow the best beam for a future time step to be predicted in advance, potentially eliminating beam training overhead entirely.
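The idea that predictable vehicle motion can shrink the beam search can be illustrated with a simple geometric predictor: extrapolate the vehicle position, convert it to a beam index, and probe only a few neighboring beams. This is an assumed illustration, not the compressed-sensing/learning approach of [Myers et al., 2019]. It assumes the vehicle's position and velocity are known (e.g., reported over V2X) and a 64-entry codebook whose beams are uniformly spaced in sin(angle); all names are hypothetical.

```python
import math


def beam_index_for_angle(angle_rad, num_beams):
    """Beam whose steering grid point (uniform in sin(angle) over [-1, 1)) is closest."""
    idx = round((math.sin(angle_rad) + 1) * num_beams / 2)
    return min(max(idx, 0), num_beams - 1)


def predict_angle(bs_xy, pos_xy, vel_xy, dt):
    """Angle from the BS array broadside (+y axis) to the vehicle's predicted position."""
    x = pos_xy[0] + vel_xy[0] * dt - bs_xy[0]
    y = pos_xy[1] + vel_xy[1] * dt - bs_xy[1]
    return math.atan2(x, y)  # positive toward +x


def candidate_beams(center, num_beams, width):
    """Predicted beam plus `width` neighbors on each side, clipped to the codebook."""
    return [i for i in range(center - width, center + width + 1) if 0 <= i < num_beams]


NUM_BEAMS = 64  # assumed codebook size
angle = predict_angle((0.0, 0.0), (-20.0, 30.0), (15.0, 0.0), dt=0.1)
center = beam_index_for_angle(angle, NUM_BEAMS)
probes = candidate_beams(center, NUM_BEAMS, width=2)
print(center, probes)  # 15 [13, 14, 15, 16, 17]
print(f"{len(probes)} probes instead of {NUM_BEAMS} for an exhaustive sweep")
```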

Experimental Plan

While trace-driven simulations can speed up experiments, nothing can replace validation on actual hardware. With the support of the NSF ($2M CNS-1925767), UCSD has been deploying a campus-scale reconfigurable mmWave network. The network is solar powered, supports V2X, and allows experimentation with Integrated Access and Backhaul and with AI-driven networking stacks.

Experimental mmWave Radio Platforms

To validate the proposed research, this project will leverage two mmWave experimental platforms that we recently developed.

Type A [Wang et al., 2020]: A partially programmable, 802.11ad-compatible mmWave radio with a 36-element or 288-element phased array, able to reconfigure codebook entries, electronically steer the beam direction, and measure per-beam CSI in real time. We have deployed eight such radios as base stations on the UCSD campus, forming a programmable mmWave network with integrated access and backhaul capabilities.
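As a rough guide to what the element count buys, the ideal coherent array gain of N elements over a single element is 10·log10(N) dB at one end of the link. This idealization ignores element patterns, phase-shifter quantization, and hardware losses, so measured gains of these radios would differ.

```python
import math


def ideal_array_gain_db(num_elements):
    """Coherent combining gain of N identical elements over a single element, in dB."""
    return 10 * math.log10(num_elements)


for n in (36, 288):
    print(f"{n:>3} elements: {ideal_array_gain_db(n):.1f} dB")
# 36 -> 15.6 dB, 288 -> 24.6 dB; the 8x larger array adds 10*log10(8), about 9 dB.
```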

Type B [Zhao et al., 2020]: A fully programmable massive MIMO mmWave software radio, composed of an array of phased arrays. Each mmWave MIMO software radio will have (i) 4 RF front-ends supporting the 60 GHz (57-64 GHz) band, and (ii) 4 programmable phased arrays attached to the RF front-ends, each with 36 (6 × 6) or 32 antenna elements. This platform can reuse existing software radios such as USRP and WARP as baseband processing units.

Research Tasks

Year 1: The first year will focus on creating accurate environment models through channel cartography. Specifically, using the Type B radio detailed above, we will build a sensing platform that determines the properties of environmental materials relevant to RF propagation. Additional work will include extending the sensing platform with multi-modal inputs such as optical sensors, investigating neural network models that inherently capture expert knowledge about RF propagation, and exploring techniques that leverage information gained at prior network sites to reduce the measurements needed at new locations.

Year 2: The second year will leverage the environment modeling from year 1 in order to design algorithms for efficient physical layer control of phased arrays, in both short and long time horizons. Specifically, the trade-offs between cell coverage, network throughput, and beam search space will be explored. Further, algorithms for mitigating the overhead of beam training will be developed.

High Performance, Data Driven, Massive MIMO Systems

Two avenues of inquiry will be pursued in this research to enable next generation wireless systems.

- High performance, low complexity, Massive MIMO systems

- Data driven wireless communication systems

Both these avenues of inquiry share some common elements and also have independent components worthy of inquiry as we discuss below.

Low Complexity Massive MIMO systems

A challenge for future communication systems will be system complexity and the associated power consumption. This problem is already evident in mmWave massive MIMO systems, where complexity has compelled hardware choices that compromise system performance. Our plan in this area includes the following.

- Collocated Antennas: The goal of this work is “how to do significantly more with less,” where less refers to reduced hardware, cheap hardware, or a combination. One topic in this context is novel antenna array geometries that richly sense the environment with few RF chains, together with the associated inference algorithms. Another topic is the use of low-complexity hardware, e.g., 1-bit ADCs. The non-linearity this introduces calls for novel signal processing algorithms for Tx-Rx design and evaluation.

- Distributed MIMO systems: This work will address access point placement for fixed infrastructure as well as for systems complemented with dynamic infrastructure, such as drones. A significant challenge in this scenario is accounting for interference and for the dynamic capacity needs of the system, which vary with user density and have significant temporal components.
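The non-linearity of a 1-bit ADC can be made concrete with the Bussgang decomposition, a standard tool in the 1-bit MIMO literature: for Gaussian input, the quantized output can be written as a scaled copy of the input plus distortion uncorrelated with it, with scale factor √(2/π) per unit-variance component. The sketch estimates that factor by simulation; unit-variance I/Q samples and the absence of noise are assumptions.

```python
import math
import random


def one_bit_adc(samples):
    """Quantize I and Q separately to +/-1, as a pair of 1-bit ADCs would."""
    return [complex(math.copysign(1.0, z.real), math.copysign(1.0, z.imag)) for z in samples]


def linear_gain(x, y):
    """Real part of the least-squares scalar B minimizing sum |y - B x|^2."""
    num = sum((yi * xi.conjugate()).real for xi, yi in zip(x, y))
    den = sum(abs(xi) ** 2 for xi in x)
    return num / den


rng = random.Random(0)
x = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(100_000)]
y = one_bit_adc(x)
B = linear_gain(x, y)
print(f"estimated B = {B:.3f}, Bussgang prediction sqrt(2/pi) = {math.sqrt(2 / math.pi):.3f}")
```

Receiver algorithms designed for 1-bit hardware typically treat the term y - Bx as additional noise, which is why its statistics matter for Tx-Rx design.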

Data Driven Massive MIMO Systems

- In the context of non-linearities in the system, instead of modeling the non-linearity, one can take a data-driven approach. An advantage of this approach is that there is no need to accurately characterize the non-linearity, which is usually difficult. Our goal is to broaden this principle to a larger class of non-linearities. Deep learning based methods are attractive for this problem due to their flexibility and universality as function approximators. This direction opens up avenues of inquiry that are fascinating and potentially richly rewarding. In particular, one can broaden the framework to ask how to build systems with cheap hardware (with unknown non-linearities) and learn the intractable model through data-driven techniques. The payoff can be large in terms of designing low-complexity, high-performance systems.

- Deep learning (DL) frameworks also have the opportunity to revolutionize channel modeling and characterization. For instance, Generative Adversarial Networks (GANs) can be used to replace conventional cluster-based parametric statistical channel models, and they can also be specialized to model individual cells using data-driven techniques. For modeling and revealing cell channel structure, one can use Variational Autoencoder (VAE) structures. Distributed massive MIMO system design relies on channel models that can be replaced with a data-driven formalism, which in turn should yield more accurate and effective AP placement algorithms.

- The deep learning work will benefit greatly from the experimental mmWave testbed. For the DL work, significant collaboration will happen for data collection and analysis.
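The data-driven idea can be illustrated at toy scale: learn an inverse (post-distorter) of a nonlinearity purely from input/output samples, without using its formula. The proposal targets deep networks; an odd-polynomial least-squares fit is substituted here only so the example stays small and self-contained, and `tanh` is an assumed stand-in for an uncharacterized hardware nonlinearity.

```python
import math
import random


def unknown_nonlinearity(x):
    # Assumed stand-in for an uncharacterized hardware nonlinearity (e.g., a
    # saturating amplifier); the fitting code below never uses this formula.
    return math.tanh(x)


def fit_polynomial(inputs, targets, orders):
    """Least-squares coefficients c with targets ≈ sum_k c_k * inputs**orders[k]."""
    feats = [[u ** k for k in orders] for u in inputs]
    m = len(orders)
    # Normal equations A c = b.
    A = [[sum(f[i] * f[j] for f in feats) for j in range(m)] for i in range(m)]
    b = [sum(f[i] * t for f, t in zip(feats, targets)) for i in range(m)]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, m):
            factor = A[r][col] / A[col][col]
            for c in range(col, m):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    coef = [0.0] * m
    for i in reversed(range(m)):
        coef[i] = (b[i] - sum(A[i][j] * coef[j] for j in range(i + 1, m))) / A[i][i]
    return coef


def evaluate(coef, orders, u):
    return sum(c * u ** k for c, k in zip(coef, orders))


# Collect input/output pairs from the "hardware", then learn its inverse from data.
rng = random.Random(1)
x = [rng.uniform(-1.2, 1.2) for _ in range(2000)]
y = [unknown_nonlinearity(v) for v in x]


def inverse_mse(orders):
    """Training MSE of a polynomial inverse mapping outputs y back to inputs x."""
    coef = fit_polynomial(y, x, orders)
    return sum((evaluate(coef, orders, yi) - xi) ** 2 for xi, yi in zip(x, y)) / len(x)


mse_linear = inverse_mse((1,))
mse_poly = inverse_mse((1, 3, 5))
print(f"linear inverse MSE: {mse_linear:.2e}, 5th-order odd inverse MSE: {mse_poly:.2e}")
```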

STIP Testbed: Prototype of Smart and Connected Vehicles and Roads

We plan to develop a prototype testbed to enable real-world data collection and evaluation of the proposed techniques. The testbed will include multiple overlapping Road-Side Units (RSUs), each consisting of a small cell with sub-6 GHz and mmWave radios and an edge computing node (Fig. 3); vehicles equipped with sub-6 GHz and mmWave radios and computing resources (Fig. 2); and street IoT devices (Fig. 1), such as smart cameras and LiDARs (from Hitachi Vantara). The testbed will enable (a) real-world data collection for training the models used by our algorithms, and (b) evaluation of the data fusion/analytics algorithms, as well as the mmWave and MMIMO techniques, under the real road latency and bandwidth needs of multiple vehicles and road users.

We have developed a preliminary testbed over the last year consisting of (a) two prototype vehicles (one example shown in Fig. 2) equipped with multiple cameras, radars, and GPS, with each vehicle having one or more vehicular local computing (VLC) devices connected to USRP B210 radios for wireless communication with RSUs, and (b) two RSUs (one example shown in Fig. 3), each containing one or more vehicular edge computing (VEC) devices connected to a USRP N310, which acts as a small cell base station. Communication between RSUs and vehicles is currently realized over 4G LTE using the open-source srsLTE protocol stack in an experimental licensed frequency band, which helps avoid interference with coexisting cellular users. We use power-efficient Nvidia Jetson boards with built-in GPUs to prototype the VLC and VEC nodes, varying the number of boards used to evaluate the impact and performance of our proposed data fusion and analytics algorithms. In the preliminary UCSD testbed, our prototype vehicles can connect to the RSUs mounted on the rooftops of Jacobs Hall and Atkinson Hall, with the small cells providing overlapping coverage of Voigt Drive and other cross streets below.

We plan to extend the current UCSD testbed with mmWave radios, in both our prototype vehicles and the RSUs. We will further develop and expand the testbed to the City of Carlsbad. The Carlsbad extension will focus on the use case of a next-generation smart intersection, including deployment of LiDAR and camera systems from Hitachi Vantara at a selected intersection, connected to an RSU equipped with both sub-6 GHz radios (C-V2X from Qualcomm) and our mmWave radio. The Carlsbad deployment will allow us to collect real-time data on pedestrians and vehicles approaching the intersection, develop AI-based models to predict the intent of pedestrians and vehicles to enter the intersection, and demonstrate the usefulness of the collaborative perception algorithms in sending timely preventive warnings to pedestrians and vehicles to avoid potential collisions. The prototype deployment will also allow us to test, validate, and demonstrate in real-world environments the ability of our proposed mmWave and MMIMO techniques to satisfy the ultra-high bandwidth and ultra-low latency requirements of the smart intersection use case. We are also in conversation with SANDAG, which has taken an interest in the project, as well as with our partner cities, Chula Vista and San Diego, and hope to expand our prototyping and deployment opportunities elsewhere.

References

[Behl et al., 2019] Behl, Aseem, et al. "PointFlowNet: Learning representations for rigid motion estimation from point clouds." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019.

[Choy et al., 2019] Choy, Christopher, Jaesik Park, and Vladlen Koltun. "Fully Convolutional Geometric Features." Proceedings of the IEEE International Conference on Computer Vision. 2019.

[Gojcic et al., 2019] Gojcic, Zan, et al. "The perfect match: 3d point cloud matching with smoothed densities." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019.

[Halber et al., 2019] Halber, M., et al. "Rescan: Inductive instance segmentation for indoor RGBD scans." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019.

[Klautau et al., 2018] Klautau, A., Batista, P., Gonzalez-Prelcic, N., Wang, Y., and Heath Jr., R.W. (2018). 5G MIMO data for machine learning: Application to beam-selection using deep learning. In 2018 Information Theory and Applications Workshop, San Diego, pages 1–1.

[Li et al., 2018] Li, Peiliang, and Tong Qin. "Stereo vision-based semantic 3d object and ego-motion tracking for autonomous driving." Proceedings of the European Conference on Computer Vision (ECCV). 2018.

[Myers et al., 2019] Myers, N. J., Wang, Y., Gonzalez-Prelcic, N., and Heath Jr., R. W. (2019). Deep learning-based beam alignment in mmWave vehicular networks.

[Remcom, 2020] Remcom (2020). Wireless EM propagation software. https://www.remcom.com/wireless-insite-em-propagation-software. Accessed: 2020-03-06.

[Straub et al., 2017] Straub, Julian, et al. "Efficient global point cloud alignment using Bayesian nonparametric mixtures." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017.

[Wang et al., 2020] Wang, S., Huang, J., Zhang, X., Kim, H., and Dey, S. (2020). X-array: Approximating omnidirectional millimeter-wave coverage using an array of phased arrays. In Proceedings of the 26th Annual International Conference on Mobile Computing and Networking, MobiCom ’20, New York, NY, USA. ACM.

[Wei et al., 2017] Wei, T., Zhou, A., and Zhang, X. (2017). Facilitating robust 60 GHz network deployment by sensing ambient reflectors. In Proceedings of the 14th USENIX Conference on Networked Systems Design and Implementation, NSDI’17, pages 213–226, USA. USENIX Association.

[Zhao et al., 2020] Zhao, R., Woodford, T., Wei, T., Qian, K., and Zhang, X. (2020). M-cube: A millimeter-wave massive MIMO software radio. In Proceedings of the 26th Annual International Conference on Mobile Computing and Networking, MobiCom ’20, New York, NY, USA. ACM.